Azure Data Factory (ADF) is Microsofts cloud based ETL tool. It is in many ways, better than their old on-premise ETL tool “integration services”. Luckily, you can easily set up ADF to move data around on-premise. In this blog post, I will show you how 🙂

I will assume you already have an ADF running, and some SQL servers running on-premise (Or on your laptop, if you just want to do it, to test ADF features).

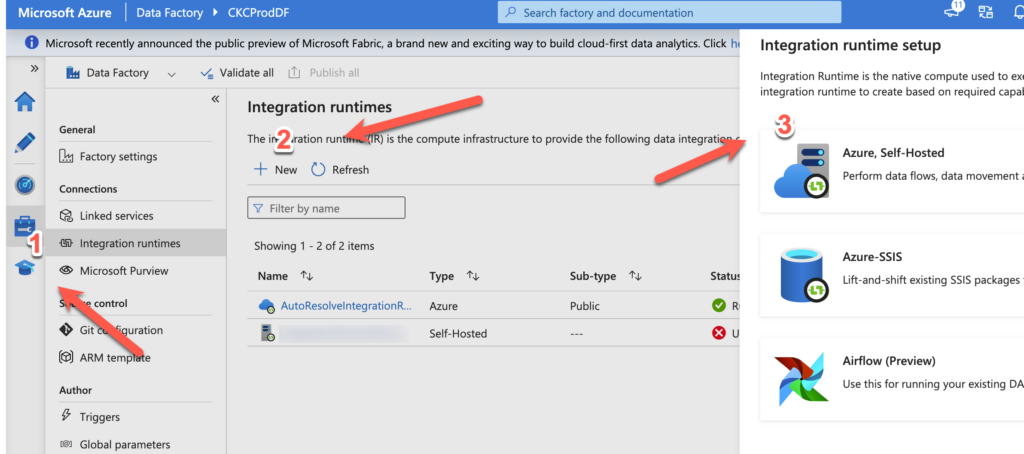

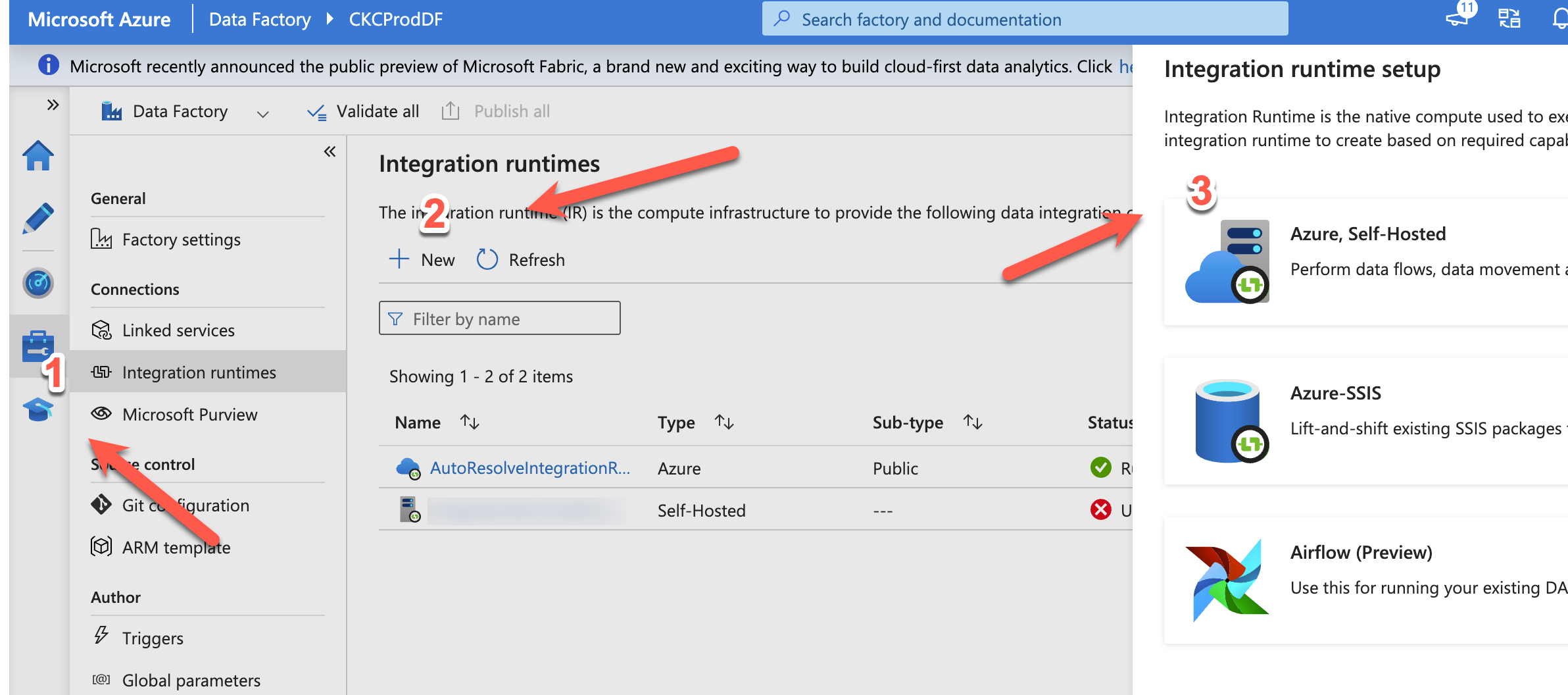

To get started, go to your ADF and

- Click the manage menu on the left, click integration runtimes

- Click New

- Click On, Azure self-hosted

As shown below.

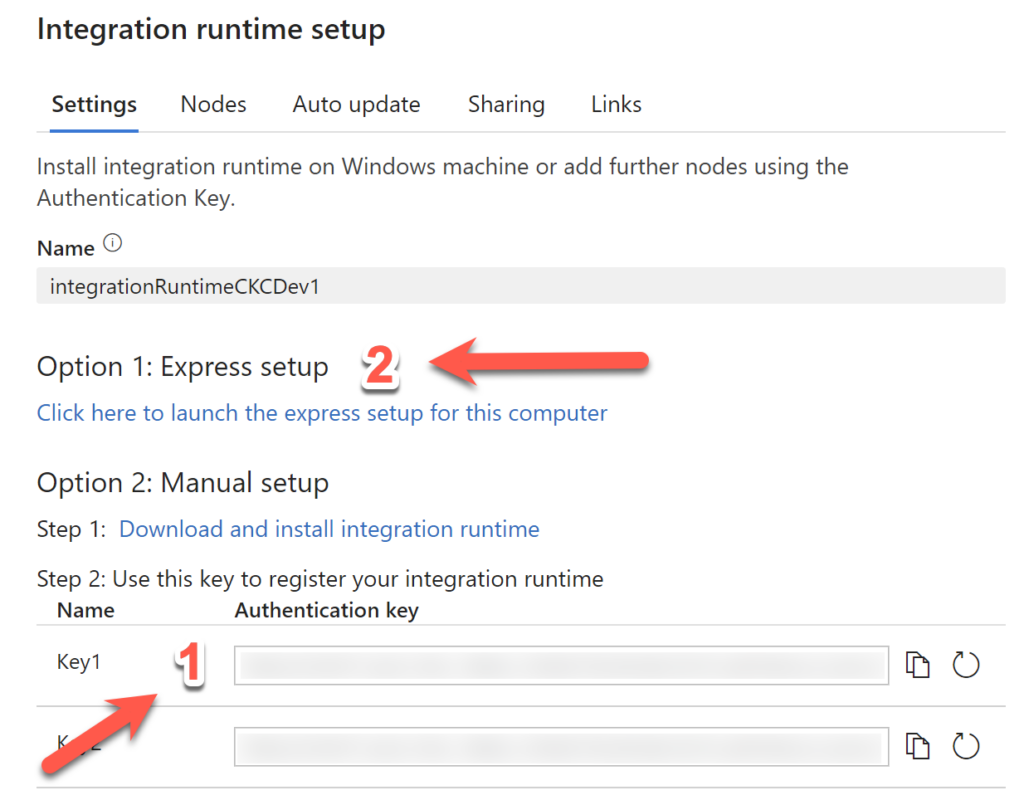

After, click new, give it a good name, and click create.

You will get 2 keys, as shown above(1). Save them a secure place, and click the link with the express setup(2).

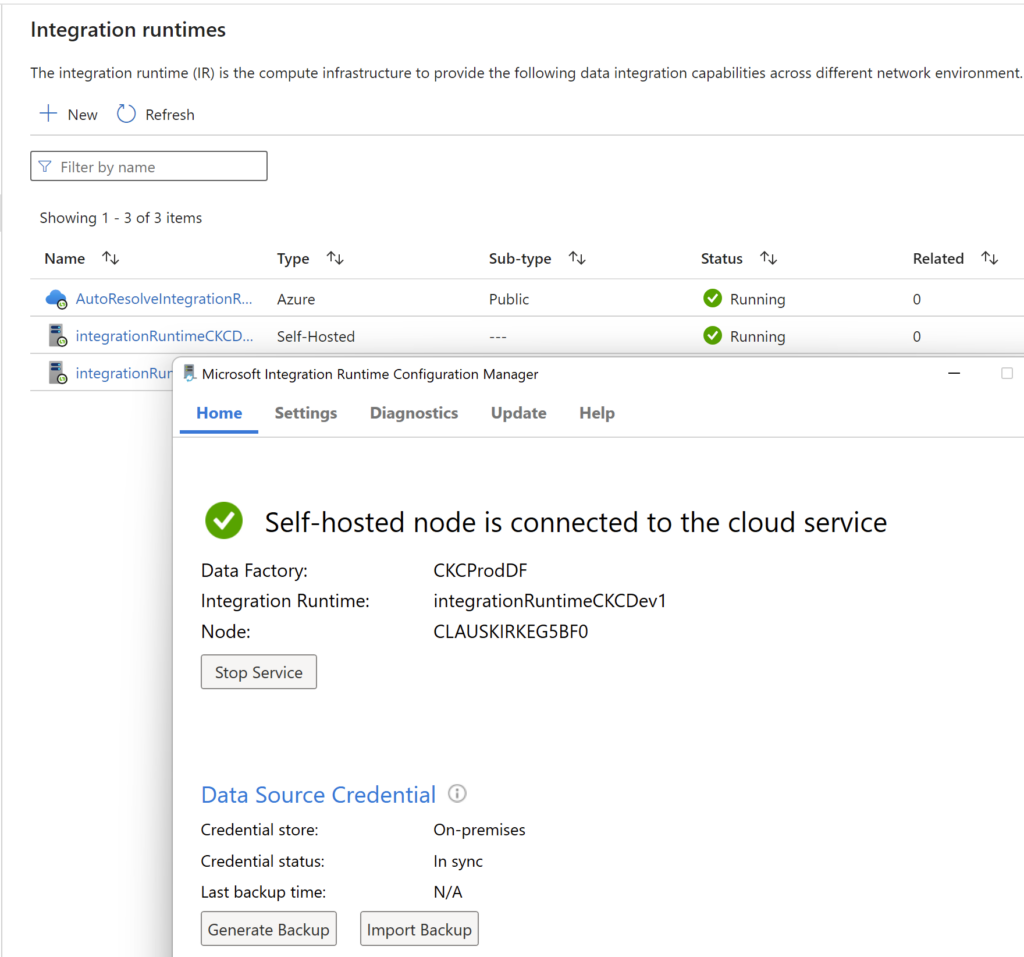

When you do the manual setup, you will need the keys. If you just do the express, you don’t need them. After installation, you should see something like this.

We are now ready to use the on-premise resources.

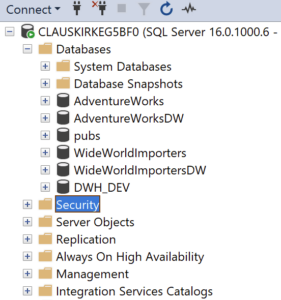

I have a local SQL server with different test databases on it. See below

From ADF I want to copy a table from adventure works to the DWH_DEV database.

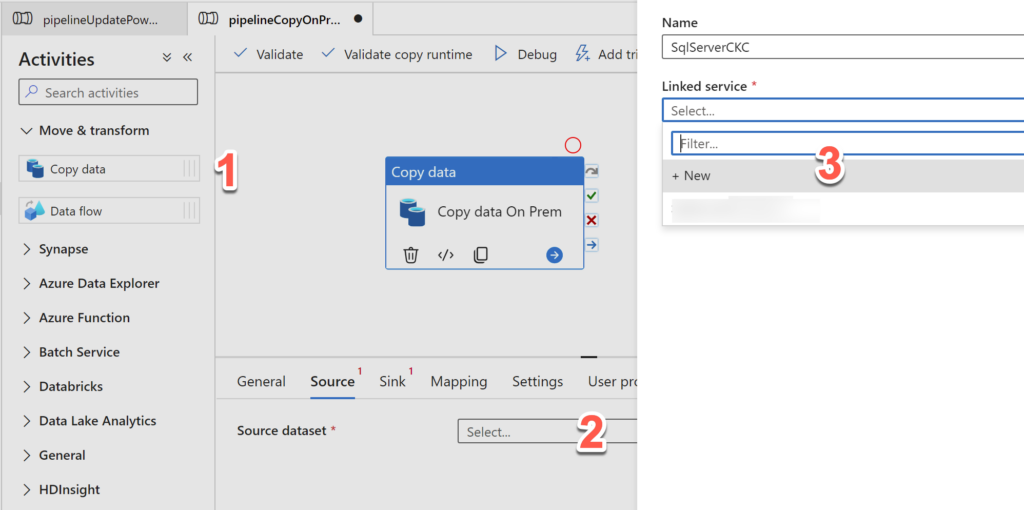

To do that, I create a pipeline, and

- Drag the copy data task in

- Under source, I click new dataset

- I click new linked service

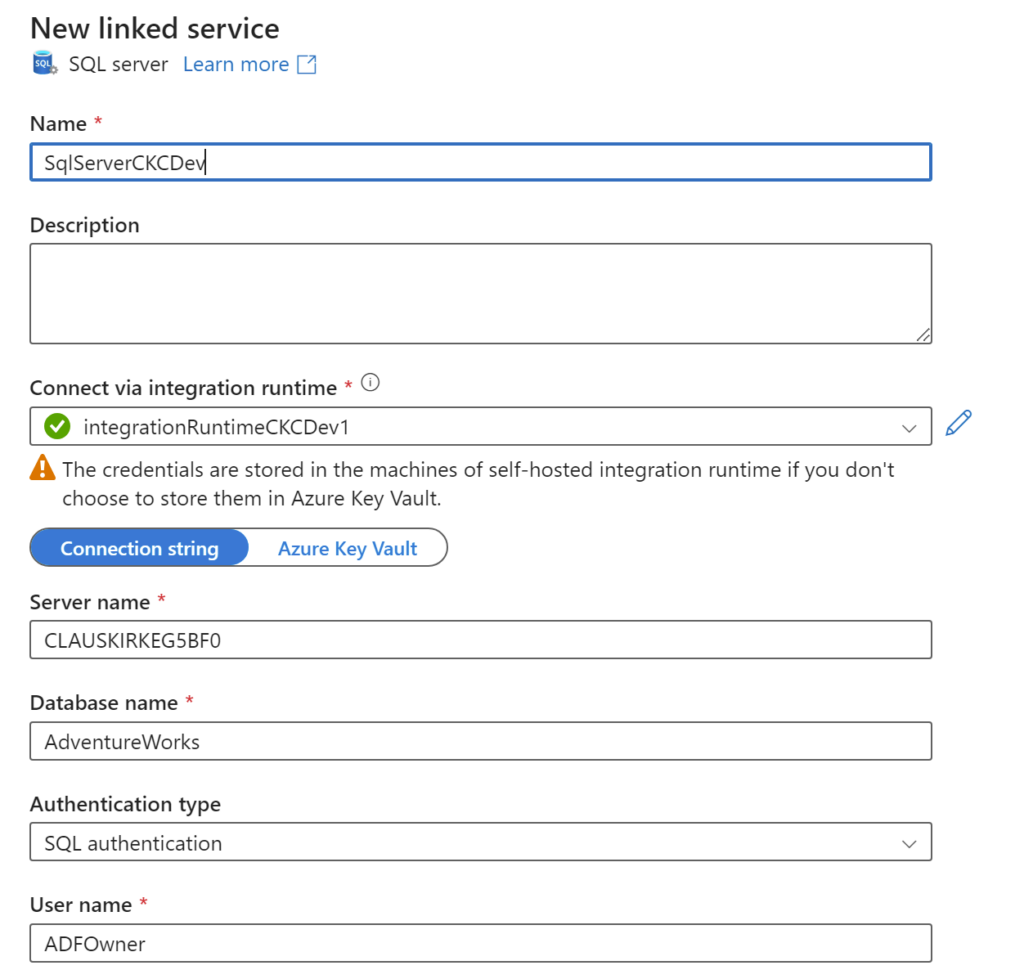

And fill in the connection information

Click test, and select the table you want to copy.

Do the same for the destination database and table.

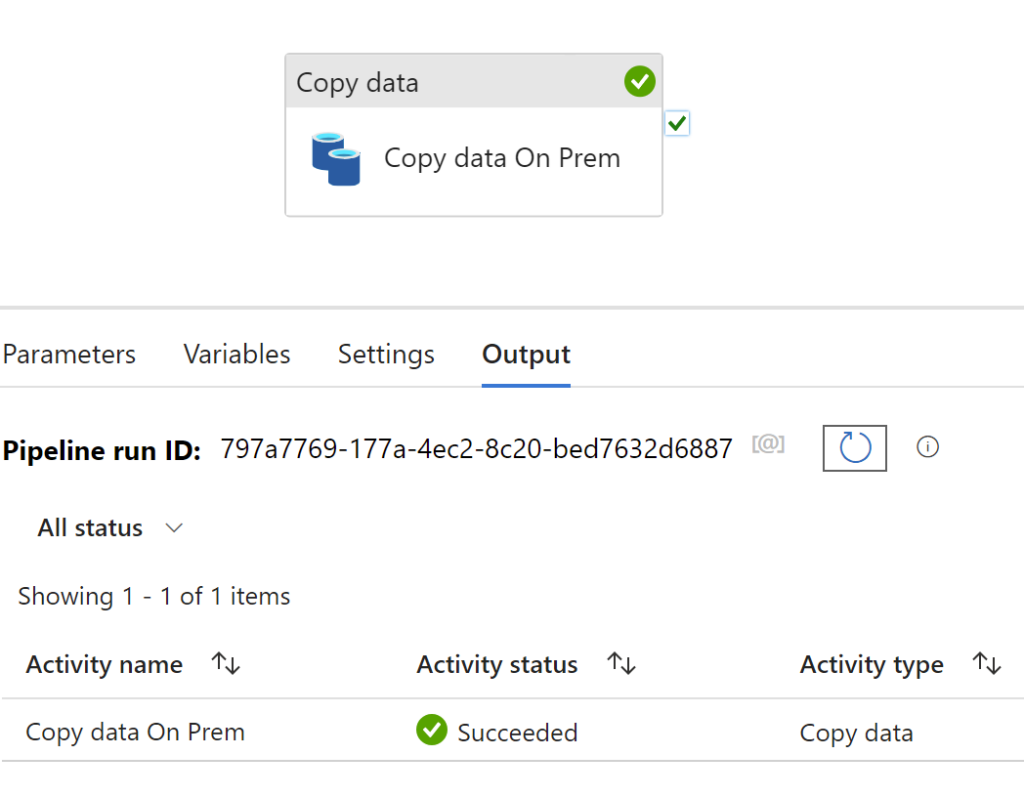

Run the pipeline. You should see a confirmation as below.

You can now copy data with ADF between your databases on-prem, and do all the transformation that is possible i ADF.

You should also be able to do all your testing with ADF as you want, and you dont have to spin up expensive Azure sql servers for all the test databases you want.

I hope this was helpfull 🙂 Enjoy 🙂

Leave a Reply